Dev Story: Project Anywhere – Digital Route to an Out-of-Body Experience

This guest post was written by Constantinos Miltiadis, Founder of studioAny.

What if, while being here, you could be a virtual presence anywhere? project Anywhere, developed by Constantinos Miltiadis at the Chair for CAAD, ETHz, is an attempt to breach the limits of physical human presence by replacing kinaesthetic, visual and auditory perception with artificial sensory experiences in a fully interactive virtual environment.

project Anywhere was featured in The Guardian (Project Anywhere: digital route to an out-of-body experience), The Observer Tech Monthly (Jan 11, 2015), Euronews Sci-tech (Project Anywhere: an out-of-body experience of a new kind [multilingual]) and a Reuters video interview (Project Anywhere takes virtual reality gaming to new level). It has been exhibited in a public installation at the TEDxNTUA events (Jan 17, 2015) and took 2nd place in the Zeiss VR One Contest for Mobile VR Apps.

Overview

project Anywhere is a wireless, multiplayer, interactive, augmented reality application that uses smartphones as stereoscopic head-mounted displays. It was developed in Unity 3D, and also uses a Java program and Arduino microcontrollers.

project Anywhere from studio any on Vimeo.

Background and overview of the concept development

Having studied architecture at NTUA in Athens and at ETH in Zurich, and being a self-taught programmer, I was introduced to Unity 3D during a 3-day workshop at the Chair for CAAD at ETHz, by Sebastian Tobler of Ateo. I was initially sceptical as to what kind of projects it would help me realise, until I tried the Oculus Rift, whose potential really amazed me. While wearing it, I lost connection with my physical surroundings in seconds, and the first thing that came to my mind was to try to see my own body and to move in the virtual space by moving in the real one. But that wasn’t possible.

Searching for the state of the art in VR, I couldn’t find any content that would allow for a greater degree of immersion in virtual reality. For me, at the time, it was a paradox that the game industry hadn’t managed to emancipate itself from classical ways of interfacing (keyboards and joysticks) or even tried to take some steps towards reinventing gaming for this new technology, rather than just fitting it to older concepts.

It was then that I was first struck by the idea of developing project Anywhere. The goal was to prove that it is feasible to have your whole body control a digital avatar in a virtual environment, in 1-to-1 correspondence and in real time. Therefore, I took it upon myself to try to develop a way to quantise my presence in physical space, and to use it to “invent” intuitive ways of interacting with a virtual environment.

I had to abandon the idea of using the Oculus Rift because of its cables, and decided to use a smartphone as a head-mounted display instead, for which I eventually prototyped a 3D-printed phone-holder mask. For body tracking I used common Microsoft Kinect infrared sensors, which provide a vector set of the player’s skeleton joint positions. For gestures and hand movements in space, I developed a pair of wireless interactive gloves, which provide the palm orientation in space and the flexing of the fingers. The Kinect sensors and the gloves are brought together by a Java program I developed called “Omnitracker”, which takes sensor readings at a frequency of 20 to 40 Hz (that is, at least once every 50 milliseconds).
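To make the shape of such a combined reading concrete, here is a minimal Python sketch. It is not the Omnitracker code (which is written in Java and not shown in this post); the class names, field names and units are all assumptions chosen for illustration. The only figures taken from the text are the 20 to 40 Hz sampling range.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GloveReading:
    """Hypothetical glove sample: palm orientation plus per-finger flex."""
    palm_orientation: Vec3          # assumed: Euler angles in degrees
    finger_flex: Tuple[float, ...]  # assumed: 0.0 = straight, 1.0 = fully bent


@dataclass
class TrackerFrame:
    """Hypothetical combined reading: skeleton joints plus both gloves."""
    timestamp_ms: int
    joints: Dict[str, Vec3] = field(default_factory=dict)
    left_glove: Optional[GloveReading] = None
    right_glove: Optional[GloveReading] = None


def period_ms(frequency_hz: float) -> float:
    """Time between two consecutive readings at a given sampling frequency."""
    return 1000.0 / frequency_hz


frame = TrackerFrame(
    timestamp_ms=0,
    joints={"head": (0.0, 1.7, 0.0)},
    right_glove=GloveReading((0.0, 90.0, 0.0), (0.1, 0.2, 0.2, 0.3, 0.1)),
)
print(period_ms(20), period_ms(40))  # 50.0 25.0
```

At 20 Hz a new frame arrives every 50 ms, and at 40 Hz every 25 ms, which is where the 50-millisecond upper bound comes from.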

I have to admit that initially I had no clear idea of how to do it, or even whether it was actually feasible. In the 6 months I spent developing it, however, I had to go through a lot of different trades, from prototyping to microcontroller programming to advanced vector math. Step by step, everything started falling into place. Networking was a keystone from the beginning, and a daunting issue to address. At some point, however, I found the Photon PUN Unity plugin, which proved to be a seamless and consistent framework and, in my case, one that could be deployed and implemented very easily without getting in the way of the rest of the development.

project Anywhere networking overview

From a networking perspective, project Anywhere doesn’t offer anything new. It simply uses, or “exploits”, the real-time functionality of Photon networking to the maximum. The features I used extensively are the bare bones of networking: Photon’s synchronisation cycles (serialisation) and Remote Procedure Calls (RPCs).

Bear in mind that I am experienced in neither game design nor networking, and thus I cannot claim that this is the ideal way to accomplish what I was going for. Nevertheless, what I describe in the following is what got the job done for me, quite effectively.

Serialisation cycles:

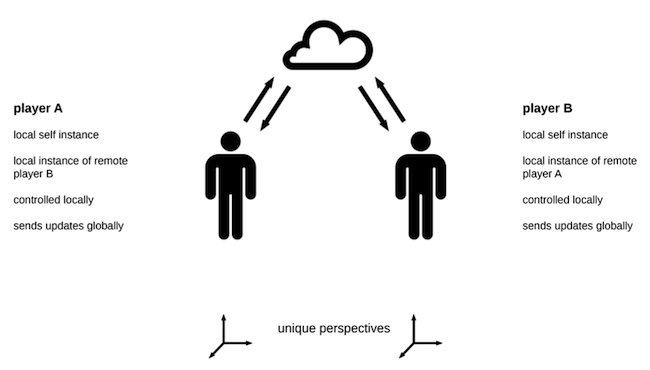

Multi-player synchronisation

In a traditional multiplayer game you have a number of players, each controlled locally. Any changes (e.g. movements) have to be synchronised as fast as possible, and are therefore sent from the local machine that made them, through the network, to the instances of that same player in the game running on the other players’ computers. So each player controls, from their local machine, their clones on all the remote ones (multi-player synchronisation diagram).
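The cycle described above can be sketched in a few lines of Python. This is a plain in-memory simulation, not Photon code: the `Machine`, `PlayerInstance` and `broadcast` names are hypothetical, and a direct function call stands in for the network.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PlayerInstance:
    player_id: int
    is_local: bool  # True only on the machine that controls this player
    position: Vec3 = (0.0, 0.0, 0.0)


class Machine:
    """One computer running the game, holding an instance of every player."""

    def __init__(self, local_player_id: int, all_player_ids: List[int]) -> None:
        self.local_player_id = local_player_id
        self.instances: Dict[int, PlayerInstance] = {
            pid: PlayerInstance(pid, is_local=(pid == local_player_id))
            for pid in all_player_ids
        }

    def move_local(self, position: Vec3) -> Tuple[int, Vec3]:
        """Apply local input and return the update to send to the others."""
        self.instances[self.local_player_id].position = position
        return self.local_player_id, position

    def receive(self, update: Tuple[int, Vec3]) -> None:
        """Apply a remote player's update to its clone on this machine."""
        player_id, position = update
        clone = self.instances[player_id]
        if not clone.is_local:  # never overwrite the locally controlled player
            clone.position = position


def broadcast(sender: Machine, others: List[Machine], position: Vec3) -> None:
    """Stand-in for the network: deliver the sender's update to every peer."""
    update = sender.move_local(position)
    for machine in others:
        machine.receive(update)


machine_a = Machine(local_player_id=1, all_player_ids=[1, 2])
machine_b = Machine(local_player_id=2, all_player_ids=[1, 2])
broadcast(machine_a, [machine_b], (1.0, 0.0, 2.0))
print(machine_b.instances[1].position)  # (1.0, 0.0, 2.0)
```

Each machine only ever writes to its own player directly; every other instance it holds is a clone that changes solely in response to updates from that player's owner.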

External input synchronisation

In the case of project Anywhere, though, this cycle gets a bit more complicated. Some input is still produced by the local device running the game (i.e. head tracking calculated from the phone’s accelerometer and gyroscope sensors), but the body movements and hand gestures of the player in space are not input through a local keyboard and mouse; they are provided by sensors connected to another device.

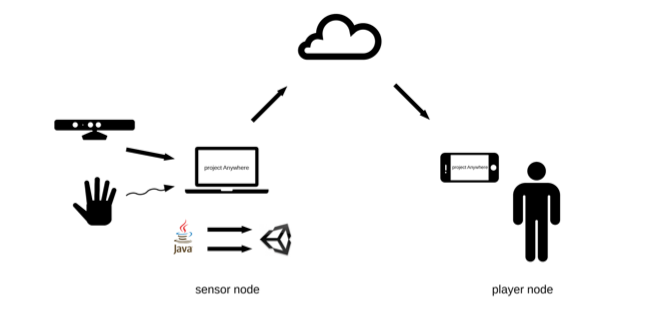

Therefore, project Anywhere has 2 types of players:

- The “subject node” which is the player of the game and joins through a mobile device,

- and the “sensor node”, a computer connected to the Kinect sensors and glove receivers which handles their data.

So the Subject instance has to get the sensor data, use them, and then forward them to its remote instances.

To accomplish that, the flow of information has to perform a larger cycle. The Sensor Node runs, in parallel, the “Omnitracker” Java program, which receives and processes data from the Kinect sensors (player position) and from the interactive gloves (hand movements and gestures). These data are then forwarded to the Unity application and pushed to the Photon Cloud via Remote Procedure Calls (RPCs; see the sensor data synchronisation diagram).

On the other end, the local Subject instance associated with a particular data set receives the data and applies the transformations (body skeleton position, hand orientation, finger flexing). When this is done, it performs the serialisation cycle, sending the updated information to the cloud for the other devices to update their instances.
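The larger cycle can be summarised as three hops: sensor node to subject, subject applies the data, subject to remote clones. The Python sketch below simulates those hops with direct method calls. It is only an illustration under stated assumptions: the class and method names are hypothetical, no Photon API is used, and the cloud relay is reduced to a function call.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Avatar:
    joints: Dict[str, Vec3] = field(default_factory=dict)


class RemoteDevice:
    """Another player's device, holding a clone of the subject's avatar."""

    def __init__(self) -> None:
        self.clone = Avatar()

    def on_serialised_state(self, joints: Dict[str, Vec3]) -> None:
        # Hop 3: the clone is updated from the subject's serialised state.
        self.clone.joints = dict(joints)


class SubjectNode:
    """The player's mobile device, owner of the avatar."""

    def __init__(self, remotes: List[RemoteDevice]) -> None:
        self.avatar = Avatar()
        self.remotes = remotes

    def on_sensor_rpc(self, joints: Dict[str, Vec3]) -> None:
        # Hop 2: apply the sensor data to the locally owned avatar...
        self.avatar.joints = dict(joints)
        # ...then run the serialisation cycle towards the other devices.
        for remote in self.remotes:
            remote.on_serialised_state(self.avatar.joints)


class SensorNode:
    """The computer attached to the Kinect sensors and glove receivers."""

    def __init__(self, subject: SubjectNode) -> None:
        self.subject = subject

    def push(self, joints: Dict[str, Vec3]) -> None:
        # Hop 1: stands in for an RPC relayed through the cloud.
        self.subject.on_sensor_rpc(joints)


remote = RemoteDevice()
subject = SubjectNode([remote])
SensorNode(subject).push({"head": (0.0, 1.7, 0.0)})
print(remote.clone.joints)  # {'head': (0.0, 1.7, 0.0)}
```

Note that the sensor node never talks to the remote devices directly: the subject remains the single owner of its avatar, and everything the others see passes through it.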

Sensor data synchronisation

Further synchronicity issues:

The process described above gets a bit trickier when we add to the equation other parameters that affect synchronicity, such as sensor errors and different sensor output frequencies. In order for these update cycles to appear seamless and uniform, the data have to undergo several smoothing passes. This is also the primary cause of the latency seen in the video, which has been optimised to some extent since the first demo.
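The post does not say which smoothing method is used, so the following Python sketch substitutes a common one, an exponential moving average, purely to illustrate why smoothing and latency go together. The function name and the `alpha` parameter are assumptions.

```python
from typing import Iterable, List


def exponential_smooth(samples: Iterable[float], alpha: float) -> List[float]:
    """Exponential moving average over a stream of sensor values.

    alpha close to 1.0 follows the raw signal closely (little smoothing,
    little lag); alpha close to 0.0 smooths heavily but reacts slowly.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    current = None
    for sample in samples:
        if current is None:
            current = sample  # the first reading passes through unchanged
        else:
            current = alpha * sample + (1.0 - alpha) * current
        smoothed.append(current)
    return smoothed


# A joint coordinate that jumps from 0.0 to 1.0 between two readings:
print(exponential_smooth([0.0, 1.0, 1.0], alpha=0.5))  # [0.0, 0.5, 0.75]
```

In this example the smoothed value is still at 0.75 two readings after the real movement has finished. Jitter from sensor errors is dampened in the same way, but so is genuine motion, which shows up as lag.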

Any feedback is welcome: studioany.zurich@gmail.com